I am a Research Staff Member at MIT-IBM Watson AI Lab, Cambridge, where I work on solving real-world problems using computer vision and machine learning. In particular, my current focus is on learning with limited supervision (transfer learning, few-shot learning) and dynamic computation for several computer vision problems.

Large Scale Neural Architecture Search with Polyharmonic Splines. AAAI Workshop on Meta-Learning for Computer Vision, 21.

X. Sun, R. Panda, R. Feris, and K. Saenko. AdaShare: Learning What to Share for Efficient Deep Multi-Task Learning. Conference on Neural Information Processing Systems (NeurIPS).

AdaShare is a novel and differentiable approach for efficient multi-task learning that learns the feature sharing pattern to achieve the best recognition accuracy.

Paper Review Adashare Learning What To Share For Efficient Deep Multi Task Learning Nips

AdaShare: Learning What to Share for Efficient Deep Multi-Task Learning

Learning to Retrieve Reasoning Paths over Wikipedia Graph for Question Answering (ICLR under ...

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. X. Sun, R. Panda, R. Feris, and K. Saenko. NeurIPS. See also: Fully-adaptive Feature Sharing in Multi-Task Networks (CVPR 17).

Adashare Learning What To Share For Efficient Deep Multi Task Learning Deepai

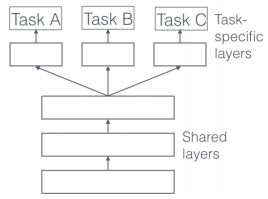

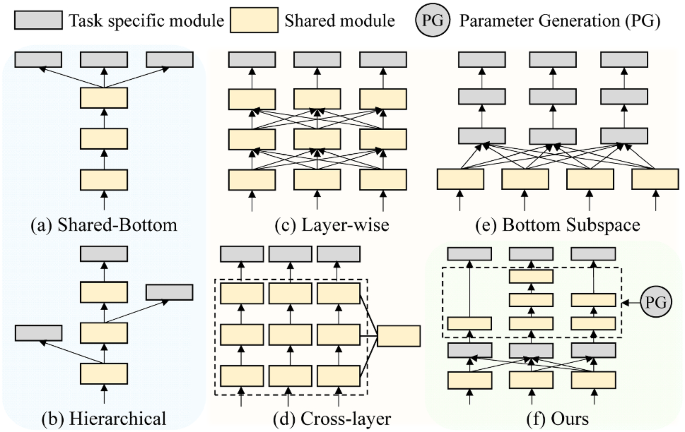

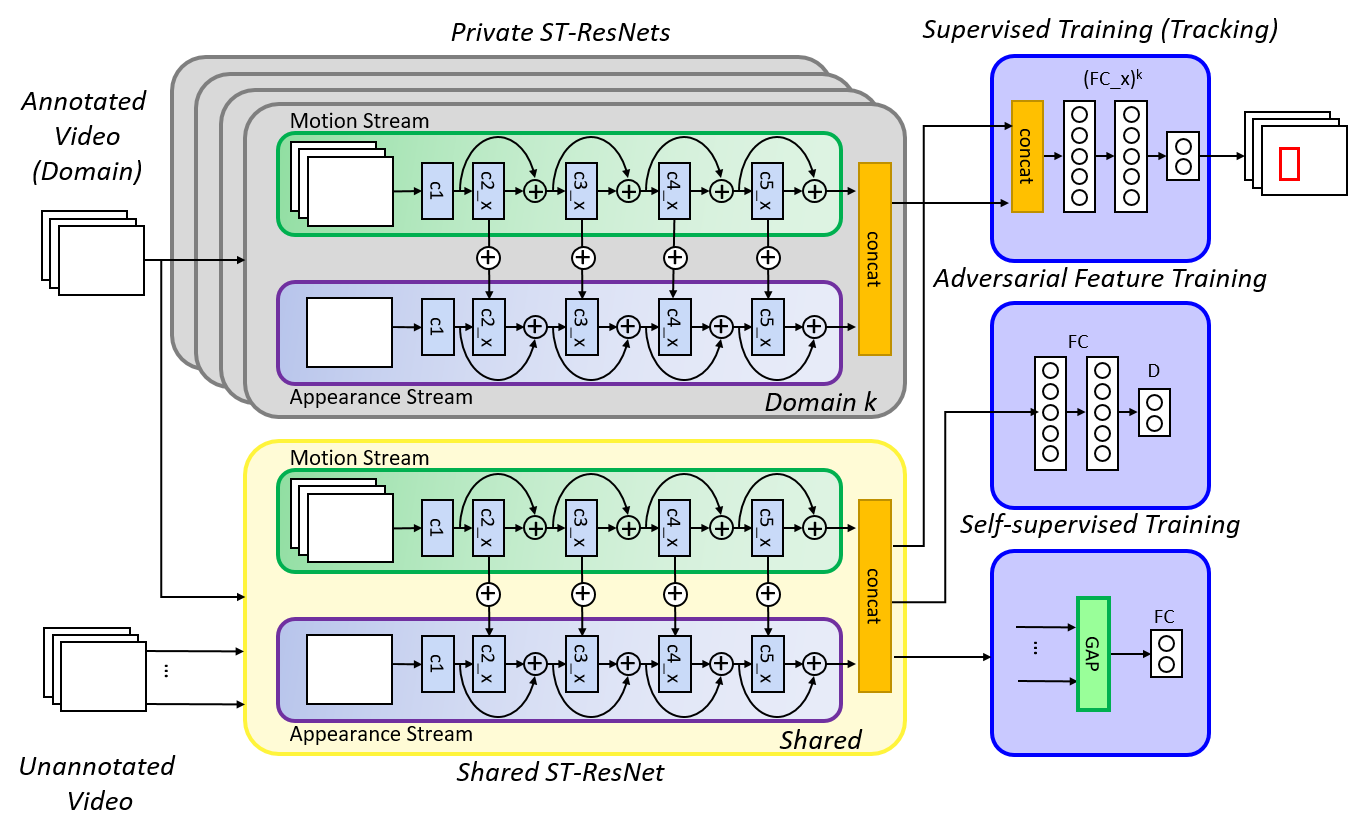

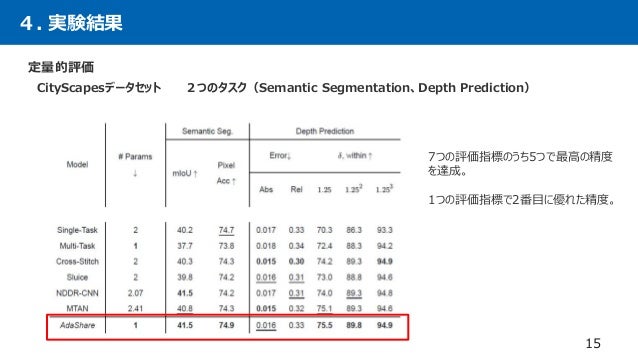

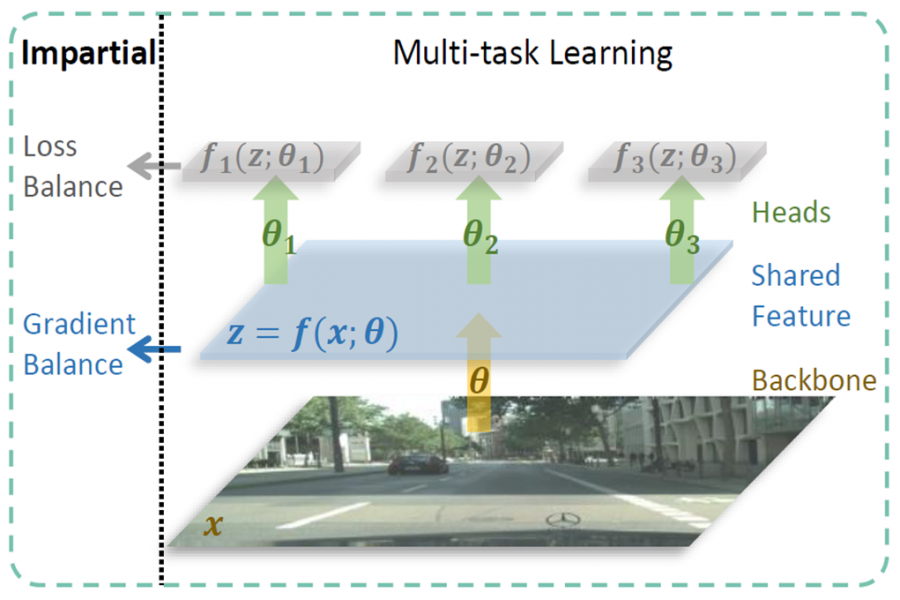

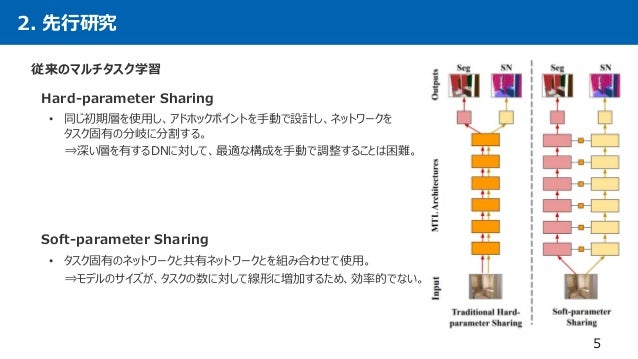

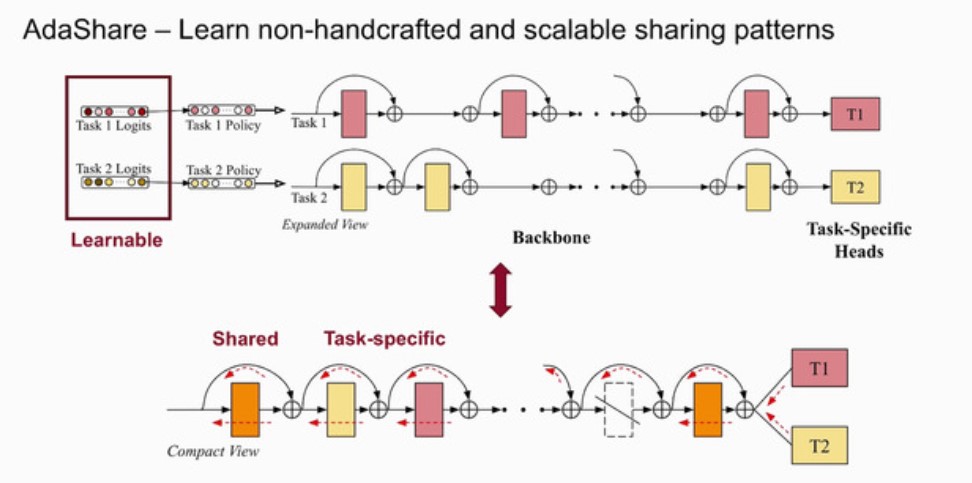

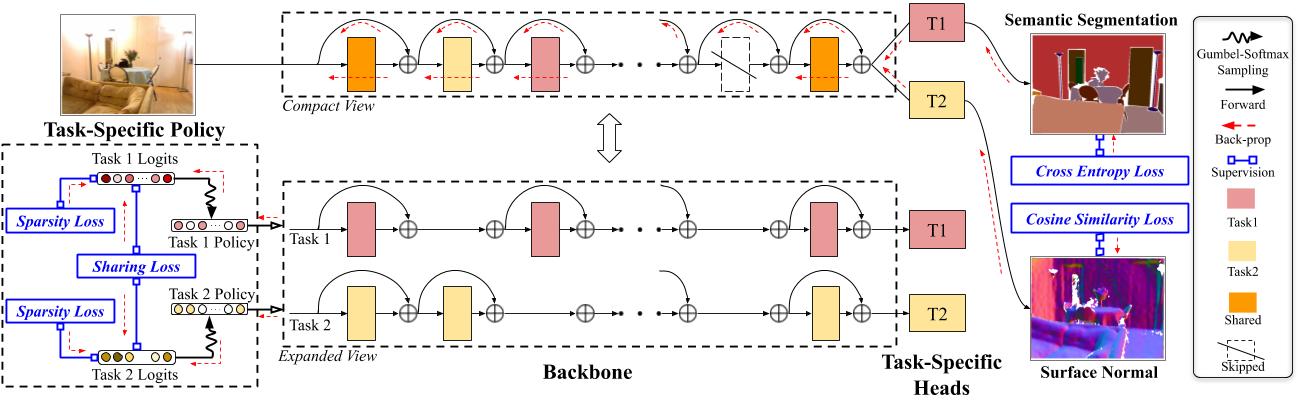

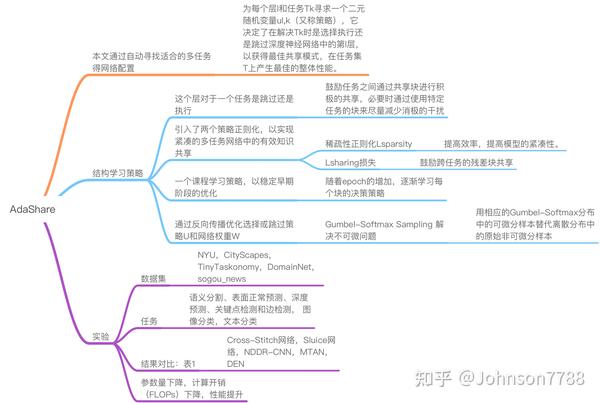

Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafted schemes that share all initial layers and branch out at an ad hoc point, or through separate task-specific networks with an additional feature sharing/fusion mechanism. Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that decides what ...

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko. Neural Information Processing Systems (NeurIPS).

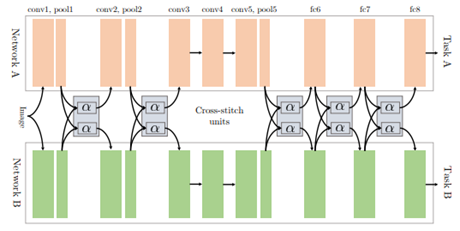

9 Dec: AIR Seminar, "AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning"

9 Dec: Poster Session, "Computational Tools for Data Science"

10 Dec: Writing Business Proposals – A Seminar for Faculty

Learning to predict multiple attributes of a pedestrian is a multi-task learning problem. To share feature representation between two individual task networks, conventional methods like Cross-Stitch and Sluice network learn a linear combination of ...

Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafting schemes that share all initial layers and branch out at an ad hoc point or through using separate task-specific networks with an additional feature sharing/fusion mechanism.
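The linear-combination style of sharing attributed above to Cross-Stitch and Sluice networks can be illustrated with a small mixing unit. This is only a sketch under assumptions, not either method's implementation: the function name `cross_stitch`, the activations, and the mixing weights in `alpha` are made up for illustration, and in a real network the weights would be learned rather than fixed.

```python
from typing import List, Tuple

Vector = List[float]


def cross_stitch(x_a: Vector, x_b: Vector,
                 alpha: List[List[float]]) -> Tuple[Vector, Vector]:
    """Mix the activations of two task networks with a 2x2 weight matrix.

    new_a = alpha[0][0] * x_a + alpha[0][1] * x_b
    new_b = alpha[1][0] * x_a + alpha[1][1] * x_b
    """
    new_a = [alpha[0][0] * a + alpha[0][1] * b for a, b in zip(x_a, x_b)]
    new_b = [alpha[1][0] * a + alpha[1][1] * b for a, b in zip(x_a, x_b)]
    return new_a, new_b


# Hypothetical activations from the two task networks at the same layer.
x_a = [1.0, 2.0]
x_b = [3.0, 4.0]

# Identity weights: each task keeps its own features (no sharing).
no_sharing = cross_stitch(x_a, x_b, [[1.0, 0.0], [0.0, 1.0]])

# Equal weights: both tasks receive the average of the two feature vectors.
full_sharing = cross_stitch(x_a, x_b, [[0.5, 0.5], [0.5, 0.5]])
```

Values of `alpha` between these two extremes give partial sharing, which is the degree of freedom such methods tune during training.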

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning (Supplementary Material). X. Sun, R. Panda, R. Feris, K. Saenko.

This paper reports work in progress on learning decision policies in the face of selective labels. The setting considered is both a simplified homogeneous one, disregarding individuals' features to facilitate determination of optimal policies, and an online one, to balance costs incurred in learning with future utility.

Kdst Adashare Learning What To Share For Efficient Deep Multi Task Learning Nips Paper Review

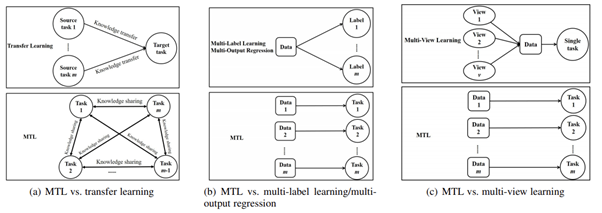

The typical way of conducting multi-task learning with deep neural networks is either through handcrafting schemes that share all initial layers and branch out at an ad hoc point or through using separate task-specific networks with an additional feature sharing/fusion mechanism. Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that ...

Multi-task learning is a subfield of machine learning that aims to solve multiple different tasks at the same time by taking advantage of the similarities between them. This can improve learning efficiency and also act as a regularizer, which we will discuss in a while. Formally, if there are n tasks (conventional deep learning ...
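Solving n tasks at the same time is commonly trained by minimizing a weighted sum of the per-task losses. The sketch below assumes that formulation, which the text above does not spell out; the task names, loss values, and weights are hypothetical.

```python
from typing import Dict


def multi_task_loss(losses: Dict[str, float], weights: Dict[str, float]) -> float:
    """Return the weighted sum of per-task losses: sum_t weights[t] * losses[t]."""
    return sum(weights[task] * loss for task, loss in losses.items())


# Hypothetical per-task losses from one training step.
losses = {"segmentation": 0.5, "depth": 2.0}

# Equal weights let the task with the larger loss dominate the total.
equal = multi_task_loss(losses, {"segmentation": 1.0, "depth": 1.0})

# Down-weighting the larger loss balances the two contributions.
rebalanced = multi_task_loss(losses, {"segmentation": 1.0, "depth": 0.25})
```

With equal weights the depth loss contributes four times as much as the segmentation loss, which is one reason the choice of task weights matters in practice.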

Multi Task Learning Study Notes A Record Of The Literature Read While Learning Mtl By Yanwei Liu Medium

Pdf Dselect K Differentiable Selection In The Mixture Of Experts With Applications To Multi Task Learning

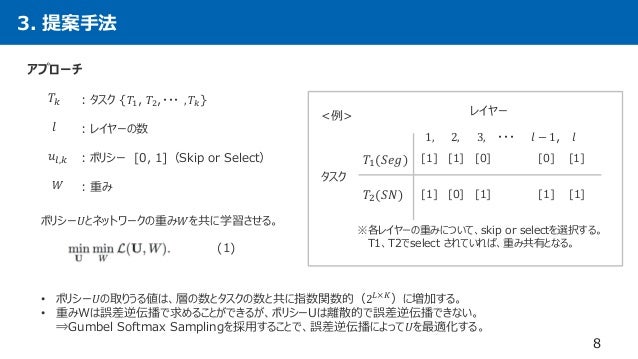

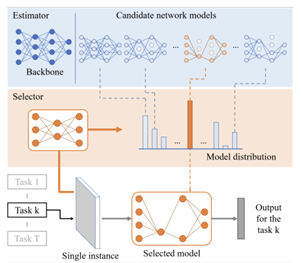

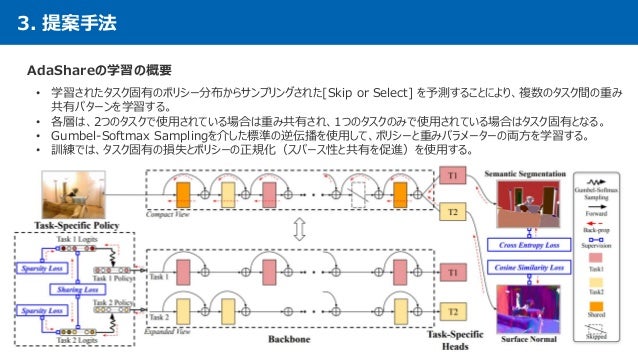

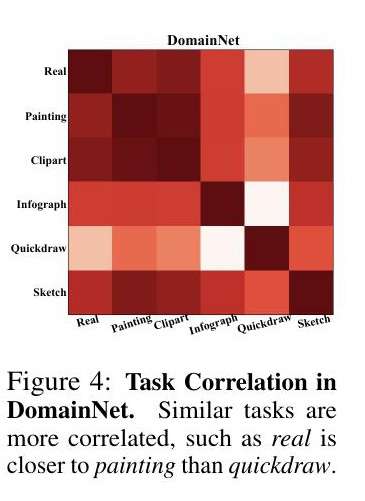

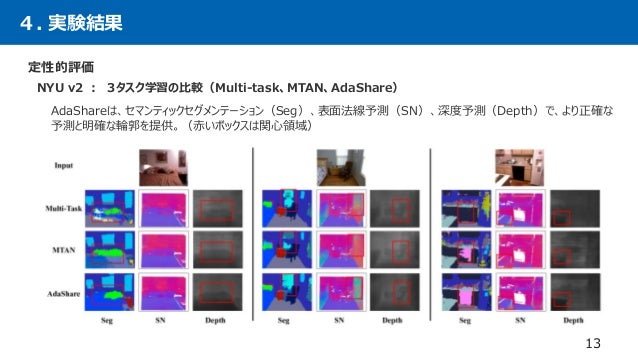

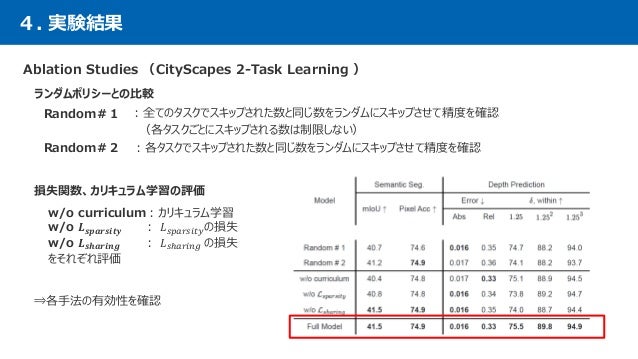

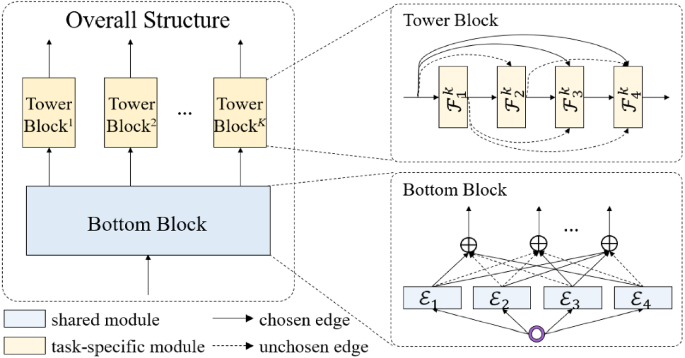

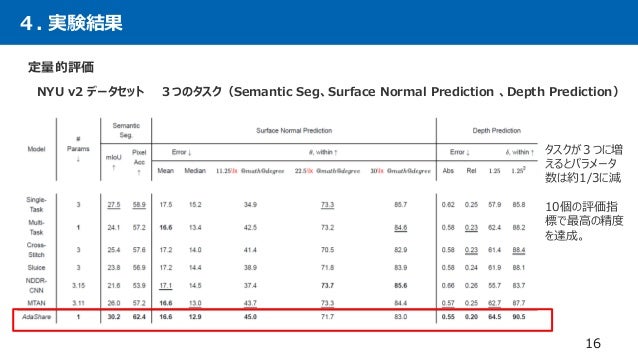

Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafted schemes that share all initial layers and branch out at an ad hoc point, or through separate task-specific networks with an additional feature sharing/fusion mechanism. Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that decides what to share ...

AdaShare is a novel and differentiable approach for efficient multi-task learning that learns the feature sharing pattern to achieve the best recognition accuracy, while restricting the memory footprint as much as possible. Our main idea is to learn the sharing pattern through a task-specific policy that selectively chooses which layers to execute for a given task in the multi-task ...

• Clustered multi-task learning: A convex formulation. In NIPS, 09.

• 23. Zhuoliang Kang, Kristen Grauman, and Fei Sha. Learning with whom to share in multi-task feature learning. In ICML, 11.

• 31. Shikun Liu, Edward Johns, and Andrew J. Davison. End-to-end multi-task learning with attention. In CVPR, 19.
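The task-specific policy described above, which chooses which layers to execute for each task, can be sketched as follows. This is a toy illustration, not the authors' implementation. It assumes residual-style blocks, so that a skipped block simply passes its input through; the snippet above only says the policy selects layers to execute. The policies here are written by hand, whereas AdaShare learns them. The names `make_block`, `forward`, and `policies` are hypothetical.

```python
from typing import Callable, Dict, List

Vector = List[float]
Block = Callable[[Vector], Vector]


def make_block(scale: float) -> Block:
    """Toy stand-in for a block's transform F(x): scales every feature."""
    def block(x: Vector) -> Vector:
        return [scale * v for v in x]
    return block


def forward(x: Vector, blocks: List[Block], policy: List[int]) -> Vector:
    """Run the shared blocks for one task under a select-or-skip policy.

    policy[i] == 1 executes block i as x <- x + F_i(x);
    policy[i] == 0 skips it and passes x through unchanged.
    """
    for block, execute in zip(blocks, policy):
        if execute:
            x = [a + b for a, b in zip(x, block(x))]
    return x


blocks = [make_block(0.5), make_block(1.0), make_block(2.0)]

# Hypothetical, hand-written policies (one entry per block, per task).
policies: Dict[str, List[int]] = {
    "segmentation": [1, 1, 0],
    "depth": [1, 0, 1],
}

outputs = {task: forward([1.0, 2.0], blocks, p) for task, p in policies.items()}

# Blocks executed by every task are the ones effectively shared across tasks.
shared = [i for i in range(len(blocks)) if all(p[i] for p in policies.values())]
```

All tasks draw on the same set of blocks, so the parameter count does not grow with the number of tasks; only the per-task execution path differs.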

Pdf Adashare Learning What To Share For Efficient Deep Multi Task Learning Semantic Scholar

Rpand002 Github Io Data Neurips Pdf

Computer Vision, Machine Learning, Artificial Intelligence

AdaShare: Learning what to share for efficient deep multi-task learning. X. Sun, R. Panda, R. Feris, K. Saenko.

AR-Net: Adaptive frame resolution for efficient action recognition. Y. Meng, CC Lin, R. Panda, P. Sattigeri, L. Karlinsky, A...

Poster: AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko.

Poster: Rewriting History with Inverse RL: Hindsight Inference for Policy Improvement. Ben Eysenbach, Xinyang Geng, Sergey Levine, Russ Salakhutdinov.

Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that decides what to share across which tasks to achieve the best recognition accuracy, while taking resource efficiency into account.

Arxiv Org Pdf 09

Deep Multi-Task Learning – 3 Lessons Learned. We share specific points to consider when implementing multi-task learning in a Neural Network (NN) and present TensorFlow solutions to these issues. By Zohar Komarovsky, Taboola.

... sharing approach, called AdaShare, that decides what to share across which tasks for achieving the best recogni... layers to execute for a given task in the multi-task net... In the context of deep neural networks, a fundamen...

Kate Saenko On Slideslive

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning #1517. Open. icoxfog417 opened this issue, 1 comment. Labels: CNN, ComputerVision.

AdaShare: Learning What to Share for Efficient Deep Multi-Task Learning (X. Sun et al.). End-to-End Multi-Task Learning with Attention (S. Liu et al., 19). Which Tasks Should Be Learned Together in Multi-task Learning?

The Outputs Of Our Pipeline A The Results Of Object Detection B Download Scientific Diagram

Abstract: Multi-task learning (MTL) is an efficient solution to solve multiple tasks simultaneously in order to get better speed and performance than handling each single task in turn. Most current methods can be categorized as either (i) hard parameter sharing, where a subset of the parameters is shared among tasks while other parameters are task-specific; ...

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafting schemes that ...

To overcome this, we introduce Sluice Networks, a general framework for multi-task learning where trainable parameters control the amount of sharing, including which parts of the models to share.
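Hard parameter sharing, as described above, keeps one subset of parameters common to all tasks and the rest task-specific. A minimal sketch of that layout is a shared trunk followed by one head per task. Everything in it is an illustrative assumption: the function names `shared_trunk` and `predict`, the toy ReLU feature extractor, and the head weights.

```python
from typing import Dict, List


def shared_trunk(x: List[float]) -> List[float]:
    """Parameters shared by every task: a toy ReLU(2 * x) feature extractor."""
    return [max(0.0, 2.0 * v) for v in x]


# Task-specific parameters: each head has its own weights over the shared features.
heads: Dict[str, List[float]] = {
    "task_a": [1.0, 0.0],
    "task_b": [0.5, 0.5],
}


def predict(x: List[float]) -> Dict[str, float]:
    """Compute the shared features once, then apply every task head to them."""
    features = shared_trunk(x)
    return {
        task: sum(w * f for w, f in zip(weights, features))
        for task, weights in heads.items()
    }


predictions = predict([1.0, -3.0])
```

The trunk runs once per input regardless of how many heads consume its output, which is where the speed benefit over handling each task in turn comes from.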

Papertalk

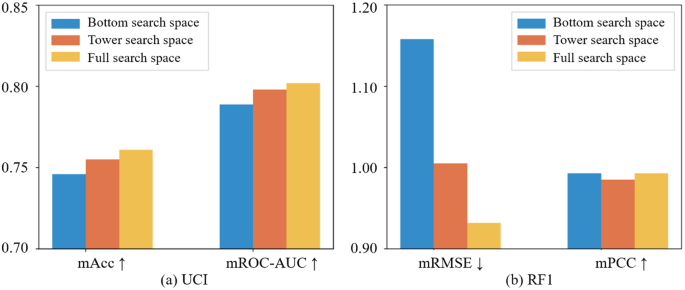

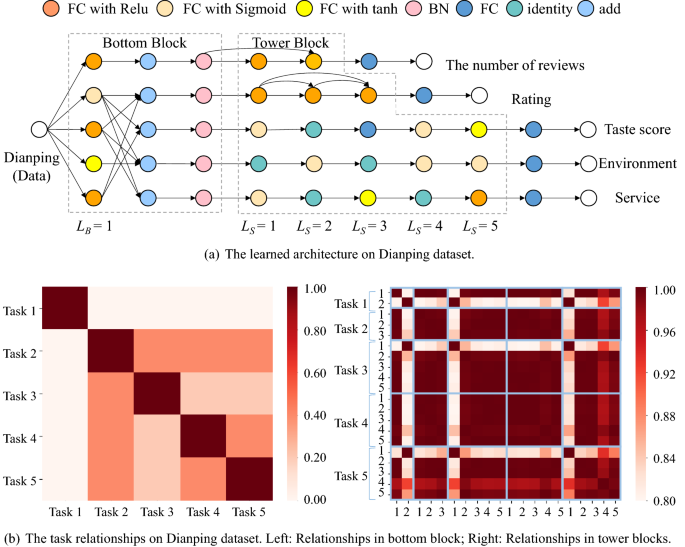

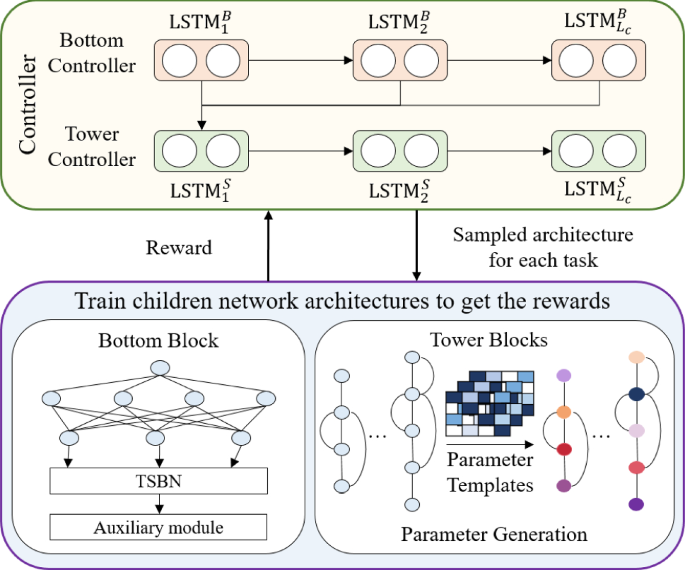

Deep Multi Task Learning With Flexible And Compact Architecture Search Springerlink

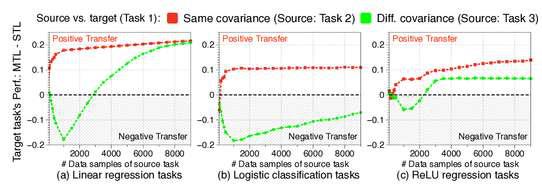

Multi-task Learning and Beyond: Past, Present, and Future. "Prize staying unstained amid the black mud; keep your light dim, yet glowing within." Recent research on multi-task learning (MTL) has produced many breakthroughs and explored many interesting new directions. This sparked my strong interest in writing a new article that tries to summarize recent progress in MTL and to explore the future of MTL ...

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. AdaShare is a novel and differentiable approach for efficient multi-task learning that learns the feature sharing pattern to achieve the best recognition accuracy, while restricting the ...

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Introduction.

Hard-parameter sharing. Advantages: scalable. Disadvantages: pre-assumed tree structures, negative transfer, sensitive to task weights.

Soft-parameter sharing. Advantages: less negative interference (though it still exists), better performance. Disadvantages: not scalable.
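The scalability contrast between hard and soft parameter sharing above can be made concrete by counting parameters. The sketch assumes that hard sharing uses one backbone for all tasks plus a head per task, and that soft sharing keeps a full backbone and head per task, with the extra fusion parameters ignored. The sizes are hypothetical round numbers, not measurements from any paper.

```python
def hard_sharing_params(num_tasks: int, backbone: int, head: int) -> int:
    """One backbone shared by all tasks, plus one head per task."""
    return backbone + num_tasks * head


def soft_sharing_params(num_tasks: int, backbone: int, head: int) -> int:
    """One backbone and one head per task (fusion parameters ignored)."""
    return num_tasks * (backbone + head)


# Hypothetical sizes: a 20M-parameter backbone and 1M-parameter heads.
BACKBONE, HEAD = 20_000_000, 1_000_000

for n in (2, 5, 10):
    hard = hard_sharing_params(n, BACKBONE, HEAD)
    soft = soft_sharing_params(n, BACKBONE, HEAD)
    print(f"tasks={n:2d}  hard={hard:>11,d}  soft={soft:>11,d}")
```

Under these assumptions, each extra task adds only a head under hard sharing but a whole backbone under soft sharing, which is the sense in which the latter does not scale.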

Dl Reading Group Adashare Learning What To Share For Efficient Deep Multi Task

The performance of multi-task learning in Convolutional Neural Networks (CNNs) hinges on the design of feature sharing between tasks within the architecture. The number of possible sharing patterns is combinatorial in the depth of the network and the number of tasks, and thus handcrafting an architecture purely based on human intuitions of task relationships can be time ...

Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafted schemes that share all initial layers and branch out at an ad hoc point, or through separate task-specific networks with an additional feature sharing/fusion mechanism. Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that decides what to share ...

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko.

Residual Distillation: Towards Portable Deep Neural Networks without Shortcuts. Guilin Li, Junlei Zhang, Yunhe Wang, Chuanjian Liu, Matthias Tan, Yunfeng Lin, Wei Zhang, Jiashi Feng, Tong Zhang.
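To get a feel for why the number of sharing patterns mentioned above is combinatorial in both depth and task count, here is one simple way to count them. The counting convention is an assumption made for illustration, not taken from the quoted papers: each layer independently partitions the tasks into groups that share that layer's parameters, and tree-structure constraints are ignored. The number of partitions of T tasks is the Bell number B(T), so L layers give B(T)^L patterns.

```python
from math import comb


def bell(n: int) -> int:
    """Number of ways to partition n items into non-empty groups (Bell number)."""
    b = [1]  # B(0) = 1
    for m in range(n):
        # Recurrence: B(m + 1) = sum over k of C(m, k) * B(k)
        b.append(sum(comb(m, k) * b[k] for k in range(m + 1)))
    return b[n]


def sharing_patterns(num_tasks: int, num_layers: int) -> int:
    """Patterns when every layer independently groups the tasks that share it."""
    return bell(num_tasks) ** num_layers


for tasks, layers in [(2, 5), (3, 5), (4, 10)]:
    print(f"{tasks} tasks, {layers} layers: {sharing_patterns(tasks, layers):,} patterns")
```

Even with three tasks and five layers this convention already yields thousands of candidate architectures, which is the motivation for learning the pattern instead of handcrafting it.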

Learning What To Share For Efficient Deep Multi Task Learning

Multi-Task Learning with Joint Feature Learning. One way to capture the task relatedness from multiple related tasks is to constrain all models to share a common set of features. For example, in school data, the scores from different schools may be determined by a similar set of features.

Awesome Multi-Task Learning. This page contains a list of papers on multi-task learning for computer vision. Please create a pull request if you wish to add anything. If you are interested, consider reading our recent survey paper, Multi-Task Learning for ...

Guo et al., CVPR 19. Data Efficiency: Transfer Learning. Fine-tuning is arguably the most widely used approach for transfer learning. Existing methods are ad hoc in terms of determining where to fine-tune in a deep neural network (e.g., fine-tuning the last k layers). We propose SpotTune, a method that automatically decides, per training example, which layers of a pretrained model ...
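The per-example layer decision described above for SpotTune can be pictured as routing each input, block by block, through either a frozen pretrained block or a fine-tuned copy of it. The sketch below assumes that reading and a residual-style update; it is not the authors' code. The routing decisions are fixed by hand here, whereas the method is described as deciding them automatically. `routed_forward` and the toy blocks are hypothetical names and values.

```python
from typing import Callable, Sequence

Block = Callable[[float], float]


def routed_forward(x: float,
                   pretrained: Sequence[Block],
                   finetuned: Sequence[Block],
                   use_finetuned: Sequence[bool]) -> float:
    """Pass one example through a stack of residual-style blocks.

    At each depth the example goes through either the frozen pretrained block
    or its fine-tuned copy, according to that example's routing decisions.
    """
    for frozen, tuned, pick_tuned in zip(pretrained, finetuned, use_finetuned):
        branch = tuned if pick_tuned else frozen
        x = x + branch(x)
    return x


# Toy blocks: the fine-tuned copies differ from the pretrained ones.
pretrained = [lambda v: 0.5 * v, lambda v: 0.5 * v]
finetuned = [lambda v: 1.0 * v, lambda v: 1.0 * v]

# Two examples with different (hand-picked) routing decisions.
out_frozen = routed_forward(1.0, pretrained, finetuned, [False, False])
out_mixed = routed_forward(1.0, pretrained, finetuned, [True, False])
```

Fine-tuning only the last k layers corresponds to one fixed routing shared by all examples; the per-example version lets different inputs use different routings.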

Openaccess Thecvf Com Content Cvpr21w Ntire Papers Jiang Png Micro Structured Prune And Grow Networks For Flexible Image Restoration Cvprw 21 Paper Pdf

Home Rogerio Feris

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Multi-task learning is an open and challenging problem in computer vision. Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that decides what to share across which tasks ...

The typical way of conducting multi-task learning with deep neural networks is either through handcrafted schemes that share all initial layers and branch out at an ad hoc point, or through separate task-specific networks with an additional feature sharing/fusion mechanism. Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that decides what ...

This time, I will review AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning, a paper presented at the NIPS poster session. Please refer to the link for the paper. Background and Introductio...

How To Do Multi Task Learning Intelligently

Learned Weight Sharing For Deep Multi Task Learning By Natural Evolution Strategy And Stochastic Gradient Descent Deepai

Papertalk The Platform For Scientific Paper Presentations

Adashare Learning What To Share For Efficient Deep Multi Task Learning Issue 1517 Arxivtimes Arxivtimes Github

Http Proceedings Mlr Press V119 Guoe Guoe Pdf

Openreview Net Pdf Id Howqizwd 42

Arxiv Org Pdf 1905

Cs People Bu Edu Sunxm Adashare Adashare Poster Pdf

Multi Task Learning And Beyond Past Present And Future Programmer Sought

Adashare Efficient Deep Multi Task Learning Zhihu

Auto Virtualnet Cost Adaptive Dynamic Architecture Search For Multi Task Learning Sciencedirect

Dl Acm Org Doi Pdf 10 1145

Learning To Branch For Multi Task Learning Deepai

Cs People Bu Edu Sunxm Adashare Neurips Slides Pdf

Adashare Learning What To Share For Efficient Deep Multi Task Learning Pythonrepo

Branched Multi Task Networks Deciding What Layers To Share Deepai

Openreview Net Pdf Id Pauvowaxtar

Github Simonvandenhende Awesome Multi Task Learning A List Of Multi Task Learning Papers And Projects

A List Of Multi Task Learning Papers And Projects Pythonrepo

Kate Saenko Proud Of My Wonderful Students 5 Neurips Papers Come Check Them Out Today Tomorrow At T Co W5dzodqbtx Details Below Buair2 Bostonuresearch

Adashare Learning What To Share For Efficient Deep Multi Task Learning Papers With Code

Multimodal Learning Archives Mit Ibm Watson Ai Lab

Aci Institute

Adashare Learning What To Share For Efficient Deep Multi Task Learning Arxiv Vanity

Http Www2 Agroparistech Fr Ufr Info Membres Cornuejols Teaching Master Aic Projets M2 Aic Projets 21 Learning To Branch For Multi Task Learning Pdf

Adashare Learning What To Share For Efficient Deep Multi Task Learning Request Pdf
